Following on from Ben’s post on Technical SEO, I thought in this month’s blog post I’d look at search engine robots and how to control them. Now, I’m sure that even the SEO newbies reading this will have some idea of how sites are crawled and indexed, but for the purposes of this post I’ll begin with a brief description of how that all goes down.

Robots and Spiders and Bots, Oh My!

Search engines find content on websites by crawling them. They send in robots (also called spiders or bots) that crawl the site, following links within the pages to find new content to index. The spiders read the content on each page and store it against that page’s URL. The information is indexed and, hey presto, you are now able to search for this content via the search engines.
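To make the “follow links” step a little more concrete, here is a minimal sketch of how a spider might pull the links out of a page so it can queue them up for crawling. It uses only Python’s standard-library `html.parser` and `urljoin`; the `LinkExtractor` class and `extract_links` helper are hypothetical names for illustration, and a real crawler does far more (fetching pages, skipping duplicates, pacing its requests).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Hypothetical helper: collects absolute URLs from every <a href> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the URL of the page being read.
                    self.links.append(urljoin(self.base_url, value))


def extract_links(base_url: str, html: str) -> list[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


page = '<p><a href="/about/">About</a> <a href="https://example.org/">Partner</a></p>'
print(extract_links("https://www.yoursitehere.com/blog/", page))
# ['https://www.yoursitehere.com/about/', 'https://example.org/']
```

Each URL found this way would be added to the crawler’s queue, which is how spiders discover pages nobody submitted to them directly.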

Now, this is all great, and a major part of SEO is optimizing websites so that search engine robots can easily crawl pages and index content. But what if we have something on our website that, for some reason, we don’t want the search engines to be able to read?

There are a number of reasons you might want to do this, and many different ways to hide content from search engines. Rand Fishkin has a post on SEOmoz with a rundown of these. But today I’m going to talk a bit about controlling robots, specifically with the robots.txt file and meta robots.

Robots.txt

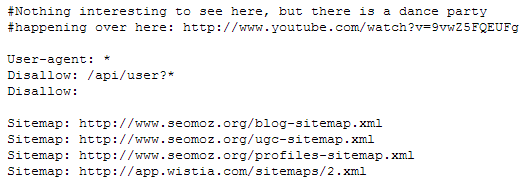

Robots.txt is a file placed in the root directory of a website. It holds a list of rules telling search engine robots which pages or folders they shouldn’t crawl.
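Because the file has to sit at the root, a robot can work out where to look for it from any page URL: keep the protocol and host, and swap the path for /robots.txt. A robots.txt placed in a subfolder (say /blog/robots.txt) isn’t consulted. The sketch below assumes Python’s standard `urllib.parse`; `robots_txt_url` is a hypothetical helper name.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Hypothetical helper: return the robots.txt URL that governs page_url.

    Only the scheme and host (including any port) are kept; the page's
    path, query string and fragment are discarded.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.yoursitehere.com/blog/post?id=1"))
# https://www.yoursitehere.com/robots.txt
```

Keeping the host as-is also reflects that each subdomain (for example shop.yoursitehere.com) is looked up separately.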

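Robots.txt is useful for stopping search engine robots from crawling pages, but preventing crawling isn’t quite the same as keeping a page out of the index. It can’t prevent URLs that are linked to from other pages on the web from appearing in the index, even though the search engine never reads their content. Robots.txt is also only a request: reputable search engine robots respect it, but it doesn’t stop anyone from visiting those pages. If there is no robots.txt file, robots assume they can access any area of the site.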
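Python’s standard-library `urllib.robotparser` can illustrate the “no rules means everything is allowed” behaviour. This sketch feeds it an empty rule set, which it treats the same way as a site without a robots.txt; the URL is just a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Simulate a site with no robots.txt rules by parsing an empty file.
parser = RobotFileParser()
parser.parse([])

# With no rules at all, every URL is treated as crawlable.
print(parser.can_fetch("Googlebot", "https://www.yoursitehere.com/any/page.html"))
# True
```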

Creating a robots.txt file is fairly straightforward: you define the user-agent (the robot you are targeting, Googlebot for example) and then the folders that robot is not allowed to crawl. Below is an example of a robots.txt file.

```
User-agent: Googlebot
Disallow: /assets/
Disallow: /logs/
Sitemap: https://www.yoursitehere.com/sitemap.xml
```

The “Disallow: /assets/” line tells Googlebot not to crawl anything inside the /assets/ folder on your website. Because the file only names Googlebot as the user-agent, other robots aren’t affected by these rules.
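One way to check what the example file actually blocks is to run it through Python’s standard-library `urllib.robotparser`. Treat this as an approximation: Python’s parser follows the original robots.txt conventions and doesn’t reproduce every detail of how Googlebot reads a file (it doesn’t support wildcards inside paths, for example). The page URLs below are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# The rules from the example robots.txt file above, as a robot would download them.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /assets/
Disallow: /logs/
Sitemap: https://www.yoursitehere.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

site = "https://www.yoursitehere.com"
print(parser.can_fetch("Googlebot", site + "/assets/logo.png"))  # False: under /assets/
print(parser.can_fetch("Googlebot", site + "/logs/2024.txt"))    # False: under /logs/
print(parser.can_fetch("Googlebot", site + "/blog/"))            # True: not disallowed
# Bingbot isn't named in the file and there is no '*' group, so nothing is blocked for it.
print(parser.can_fetch("Bingbot", site + "/assets/logo.png"))    # True
print(parser.site_maps())  # ['https://www.yoursitehere.com/sitemap.xml']
```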

Note the Sitemap line. Adding your sitemap to this file makes it easy for search engines to locate. If your website has multiple sitemaps, list each one on its own Sitemap line.
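Here is a sketch of what multiple sitemaps look like, again checked with Python’s `urllib.robotparser`. The two sitemap file names are hypothetical. Sitemap lines aren’t tied to any User-agent group, so it doesn’t matter where in the file they appear.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical site with two sitemaps, each on its own Sitemap: line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /logs/

Sitemap: https://www.yoursitehere.com/sitemap-pages.xml
Sitemap: https://www.yoursitehere.com/sitemap-posts.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
print(parser.site_maps())
# ['https://www.yoursitehere.com/sitemap-pages.xml',
#  'https://www.yoursitehere.com/sitemap-posts.xml']
```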

Tips for Using Robots.txt

The user-agent can be set to ‘*’, which matches all robots rather than a specific one.
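It’s worth knowing how ‘*’ interacts with a named robot. Google documents that a crawler follows only the most specific group that matches it, so a robot with its own group ignores the ‘*’ rules entirely. For a simple file like the hypothetical one below, Python’s `urllib.robotparser` behaves the same way, which makes it a handy way to check the effect.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /assets/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

site = "https://www.yoursitehere.com"
# Robots without a group of their own fall back to the '*' rules.
print(parser.can_fetch("Bingbot", site + "/private/report.html"))    # False
print(parser.can_fetch("Bingbot", site + "/assets/logo.png"))        # True
# Googlebot has its own group, so the '*' rules don't apply to it.
print(parser.can_fetch("Googlebot", site + "/private/report.html"))  # True
print(parser.can_fetch("Googlebot", site + "/assets/logo.png"))      # False
```

If you want Googlebot to respect the /private/ rule as well, it has to be repeated inside the Googlebot group.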

If you want to block search engine spiders from your whole website, enter ‘Disallow: /’. Because every URL path on the site starts with ‘/’, this single rule covers the entire website.
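Be careful with that slash: “Disallow: /” blocks everything, while “Disallow:” with nothing after the colon blocks nothing at all. The sketch below compares the two using Python’s `urllib.robotparser`; `load_rules` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser


def load_rules(text: str) -> RobotFileParser:
    """Hypothetical helper: parse robots.txt text held in a string."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


block_all = load_rules("User-agent: *\nDisallow: /\n")  # every path starts with '/'
allow_all = load_rules("User-agent: *\nDisallow:\n")    # empty value: nothing is blocked

url = "https://www.yoursitehere.com/blog/"
print(block_all.can_fetch("Googlebot", url))  # False
print(allow_all.can_fetch("Googlebot", url))  # True
```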

RobotsTxt.org has some great resources, such as a robots database listing known robots and a checker for testing your robots.txt file and meta tags.

Meta Robots

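The meta robots tag is a line of code that goes in the <head> of a webpage. It’s relatively simple to implement and also allows you to remove content from the index. It works well for blocking content on specific pages but can be harder to put into place on a larger scale. Keep in mind that a robot can only read the tag if it’s allowed to crawl the page, so the meta robots tag on a page blocked by robots.txt will never be seen.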

Adding the meta tag to your HTML is straightforward: it consists of the tag, the name (“robots”) and the content (the commands). Google suggests placing all of the commands within one meta tag, as this makes them ‘easy to read and reduces chance for conflicts’. Below is an example.

```html
<meta name="robots" content="noindex, noarchive, noodp">
```
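Here is a sketch of how a robot might read the commands out of a page, using Python’s standard-library `html.parser`. The `MetaRobotsParser` class and `meta_robots_directives` function are hypothetical names. The sketch only looks at name="robots" tags, matches the name case-insensitively, and splits the content on commas. Note that the attribute values need ordinary straight quotes; curly quotes pasted from a word processor won’t be read as quotes by an HTML parser.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Hypothetical helper: collects commands from <meta name="robots"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        # The name value is compared case-insensitively ("ROBOTS" == "robots").
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        for directive in content.split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def meta_robots_directives(html: str) -> set[str]:
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, noarchive, noodp"></head></html>'
print(sorted(meta_robots_directives(page)))
# ['noarchive', 'noindex', 'noodp']
```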

The following are the commands and their definitions.

noindex: This page shouldn’t be put in the index or should be removed from the index.

nofollow: The links on this page shouldn’t be followed.

nosnippet: A snippet of the page or a cached version shouldn’t be shown in the search results.

noarchive: A cached version of the page shouldn’t be shown in the search results.

noodp: The title and description from the Open Directory Project shouldn’t be used in the search results. The Open Directory Project shut down in 2017, so this command no longer has any effect.

none: This command is equivalent to “noindex, nofollow”.
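A robot has to turn that list of commands into decisions. Below is a minimal sketch, assuming a plain comma-separated content value, of how the “none” shorthand might be expanded and then used to decide whether a page can be indexed and its links followed. The function names are hypothetical, and only noindex, nofollow and none are interpreted here.

```python
def effective_directives(content: str) -> set[str]:
    """Hypothetical helper: normalise a meta robots content value.

    'none' is shorthand for 'noindex, nofollow', so it is replaced by those
    two commands; everything else is kept as written (lower-cased).
    """
    directives = {d.strip().lower() for d in content.split(",") if d.strip()}
    if "none" in directives:
        directives.discard("none")
        directives.update({"noindex", "nofollow"})
    return directives


def may_index(content: str) -> bool:
    return "noindex" not in effective_directives(content)


def may_follow_links(content: str) -> bool:
    return "nofollow" not in effective_directives(content)


print(sorted(effective_directives("none")))  # ['nofollow', 'noindex']
print(may_index("noarchive, nosnippet"))     # True
print(may_follow_links("NONE"))              # False
```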

Robots.txt and meta robots are two of the most common ways of controlling which content on a site is crawled and indexed, and hopefully this post on robot basics is a helpful introduction to this aspect of Technical SEO.