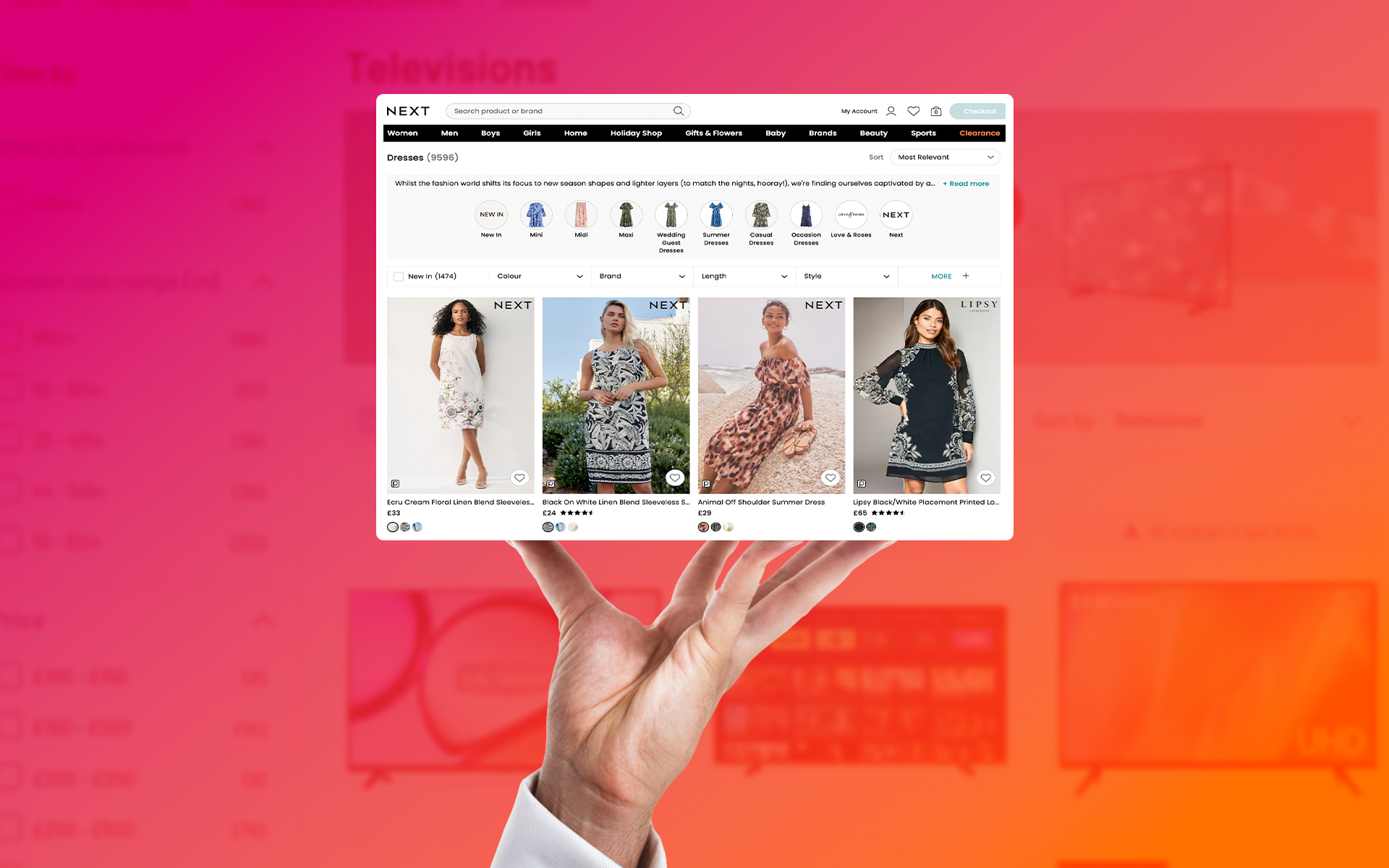

Crawl issues with e-commerce websites are something we regularly come across here at Boom. These usually arise from some combination of pagination (e.g. multiple pages of products in the same category), duplication (e.g. items that appear in multiple categories) and query parameters (e.g. changing the sort order or filtering the list of products). Occasionally we get some extra fun when a developer throws some AJAX into the mix (that’s Asynchronous JavaScript And XML: the stuff that makes things happen in web pages without reloading the whole page, e.g. Google Maps, Facebook etc.). Why are these such an issue for search engines, you may wonder? Several reasons:

- Not getting indexed: If a search engine can’t reach a page, that page can’t be indexed and nobody will find it via searching.

- Duplicate content: Duplicate content has been known since Google’s first Panda update to have the potential to cause a penalty, so you really don’t want to present Google with multiple URLs for identical or very similar content.

- Crawl budget: A search engine only expends so much effort crawling your site on each visit, known as the crawl budget. You don’t want to waste it on many versions of the same page at the expense of new pages getting crawled, which would leave less than 100% of your important pages available via search.

- Landing pages: If a search engine has multiple URLs for the same page, you have no control over which one it will present in a search result. Would it be the best outcome if a user clicked from a search to your category page listing products with the most expensive at the top?

Let’s dive into some of the common issues that can cause these problems…

Query Parameters

Query parameters could be in use for a number of reasons, most commonly to implement sort orders and filtering. Often, you will see them on the end of URLs following a question mark, e.g. https://www.patra.com/category/womensthermals?SortBy=2. The issue with this approach is that you can’t be sure whether search engines will crawl or ignore these URLs – search engines try to understand whether the parameters affect the page content, but you can’t guarantee they will get it right. In this example, the products are being ordered by lowest price, meaning the content is likely to be very similar to other options such as order by highest price (particularly if there is only one page of products); as such, we wouldn’t want all of the sorting option URLs to be indexed.

However, in this example, we also have more than one page of products. Clicking to the second page gives us this URL: https://www.patra.com/category/womensthermals?SortBy=2&Page=2

So now we have a query parameter controlling pagination as well, which we do want indexing. We still don’t want the different sort orders indexed though, so what’s to be done? Ideally, three things:

- Canonicalisation: Use the canonical tag to tell Google that, whichever version of the URL it happens to be crawling right now, there is only one it should index, and that all the others should be treated as the same page. In the case of multiple parameters, keep only the ones that you do want indexing, such as pagination. In the example above, this would be: <link rel="canonical" href="https://www.patra.com/category/womensthermals?Page=2">

- Rel next & prev tags: Google also provides tags to help it understand when a page is part of a paginated sequence and where in that sequence it belongs. In a nutshell, you have only a rel="next" tag on the first page, only a rel="prev" tag on the last page, and both on the pages in-between, each referencing the relevant previous and next URLs in the sequence. In the case of our example, page number 2 in the sequence, these would be: <link rel="prev" href="https://www.patra.com/category/womensthermals"> <link rel="next" href="https://www.patra.com/category/womensthermals?Page=3">

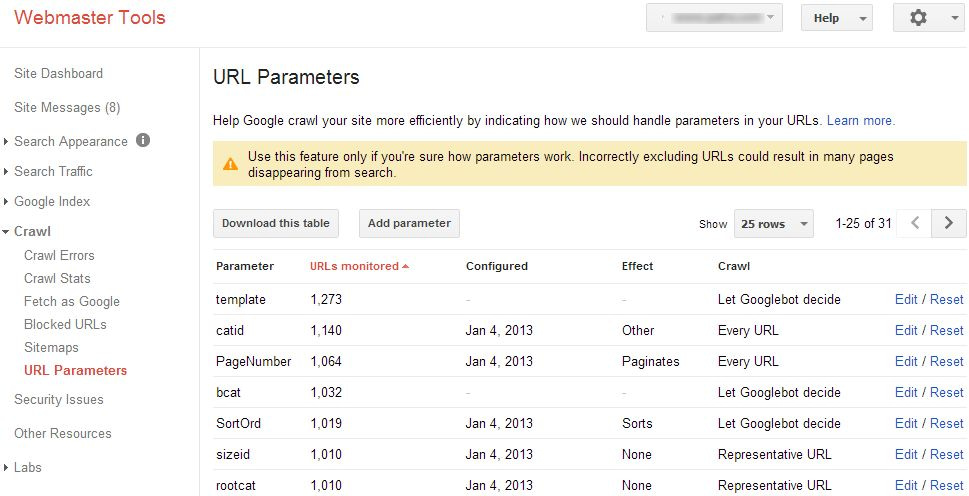

- Webmaster Tools URL Parameters: Google provides a mechanism to tell it whether it should index or ignore URLs containing a given parameter (or let Googlebot decide if you’re not sure yourself). If misused, this tool could stop pages being indexed when you didn’t intend it, so only use it if you are confident that you know what each parameter does and what the consequences of your settings in Webmaster Tools will be.
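To make the first two points concrete, here is a minimal Python sketch of how a category template might work out its canonical, prev and next tags. It assumes that `Page` is the only parameter worth indexing, that page one lives at the bare category URL, and that the category has three pages; the function names and the `INDEXABLE_PARAMS` set are our own illustrations, not part of any platform.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: only "Page" changes which products are shown; everything
# else (e.g. SortBy) just reorders or filters the same content.
INDEXABLE_PARAMS = {"Page"}


def canonical_url(url):
    """Strip every query parameter except the ones we want indexed."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k in INDEXABLE_PARAMS]
    # Assumption: Page=1 is the same content as the bare category URL.
    kept = [(k, v) for k, v in kept if not (k == "Page" and v == "1")]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def page_url(base, page):
    """URL of a given page in the sequence; page one has no parameter."""
    return base if page == 1 else f"{base}?Page={page}"


def head_links(base, page, last_page):
    """Build the canonical, prev and next <link> tags for one page."""
    tags = [f'<link rel="canonical" href="{page_url(base, page)}">']
    if page > 1:  # the first page has no prev tag
        tags.append(f'<link rel="prev" href="{page_url(base, page - 1)}">')
    if page < last_page:  # the last page has no next tag
        tags.append(f'<link rel="next" href="{page_url(base, page + 1)}">')
    return tags


if __name__ == "__main__":
    base = "https://www.patra.com/category/womensthermals"
    print(canonical_url(base + "?SortBy=2&Page=2"))
    for tag in head_links(base, page=2, last_page=3):  # last_page is assumed
        print(tag)
```

Whatever sort order the visitor has chosen, every variant of page two then points search engines at the same single URL.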

Other Paginated URL Formats

Of course, not all sites use traditional query strings to handle pagination. Often, because the developers will have been told that query parameters are a problem for SEO, alternatives will be in place, such as automatically re-writing query strings into a series of sub-directories (e.g. https://www.jannersmugs.co.uk/product-category/mugs-and-steins/page/2/). These aren’t a problem, because they are distinct URLs and the content of each will be different. It is sometimes argued that search engines treat URLs that are many sub-directories deep as less important, but I have seen little evidence of this if the pages in question are relevant and well-optimised.
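As a rough illustration of that kind of rewrite, the Python sketch below maps a query-string page number onto a sub-directory. The `?page=2` input form and the `to_path_pagination` name are assumptions made for the example; we don’t know how any particular site implements this, and in practice it is usually handled by the platform or server rewrite rules.

```python
from urllib.parse import parse_qs, urlsplit, urlunsplit


def to_path_pagination(url, param="page"):
    """Rewrite ?page=N into a /page/N/ sub-directory.

    Note: this sketch drops any other query parameters, which is only
    appropriate if they don't change the content of the page.
    """
    parts = urlsplit(url)
    page = parse_qs(parts.query).get(param, ["1"])[0]
    path = parts.path.rstrip("/") + "/"
    if page != "1":  # page one stays at the bare category URL
        path += f"page/{page}/"
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


if __name__ == "__main__":
    # Hypothetical query-string form of the category URL.
    print(to_path_pagination(
        "https://www.jannersmugs.co.uk/product-category/mugs-and-steins/?page=2"
    ))
```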

Then you have the AJAX method of pagination – loading different products into the page without changing the URL (like https://www.petsathome.com/shop/en/pets/cat/cat-food-and-treats/dry-cat-food) or using a hash instead of a question mark (https://www.stringsdirect.co.uk/c/541/strings/electric-guitar-strings-sets/#page-6). In both of these cases, you need to be very careful – we have seen several recent examples (thankfully all now fixed) where products listed on page two and beyond were not indexed, because to a search engine, there were no pages beyond the first one.

The reason for this is that in the case of JavaScript links such as those used by Pets At Home, there is every chance that the search engine won’t follow them – there is too great a risk that they do something the search engine can’t handle, so they get ignored. In the case of the hash symbol in URLs, it is expected to represent an in-page anchor (link), the kind that jumps you down the page. As a result, search engines don’t index the part after the # symbol, as they think it means a link within the same page.
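A quick way to see the hash problem is to strip the fragment from each URL, which is roughly how a crawler treats them. In this Python sketch the `#page-2` URL is a hypothetical sibling of the Strings Direct example, and `crawlable_urls` is just an illustrative name.

```python
from urllib.parse import urldefrag


def crawlable_urls(urls):
    """Return the distinct URLs left once the #fragment is discarded."""
    return {urldefrag(u).url for u in urls}


if __name__ == "__main__":
    base = "https://www.stringsdirect.co.uk/c/541/strings/electric-guitar-strings-sets/"
    pages = [base, base + "#page-2", base + "#page-6"]  # "#page-2" is assumed
    # All three collapse to the same address, so only page one is visible.
    print(crawlable_urls(pages))
```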

That is not to say you cannot use these forms of pagination if you want to get indexed – you will notice that both the examples above are seeing products listed several pages into their categories being indexed. You just need to ensure that you’re aware of the potential issues and consider implementing something such as HTML Snapshots, Google’s recommended solution. If you look at the actual link URL on Strings Direct (not the URL you see in the browser address bar), you will see this in action, using “hashbang” URLs that contain “#!page=”, the clue Google needs to understand that AJAX is in action (it isn’t that simple though – read the guide linked to above!)
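For the curious, the hashbang mechanism boils down to a URL mapping: under Google’s AJAX crawling scheme, as we understand it, the crawler swaps the “#!” part for an `_escaped_fragment_` query parameter and expects the server to answer that request with an HTML snapshot. The Python sketch below shows only that mapping; the page number 6 and the function name are assumptions, and the encoding here is stricter than the scheme strictly requires. Google later deprecated this scheme, so check its current guidance before building on it.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def escaped_fragment_url(url):
    """Map a #! URL to the URL a crawler would request for the snapshot.

    Returns None when the URL has no hashbang fragment.
    """
    parts = urlsplit(url)
    if not parts.fragment.startswith("!"):
        return None
    # Percent-encode the state after "#!", leaving "=" readable.
    state = quote(parts.fragment[1:], safe="=")
    extra = f"_escaped_fragment_={state}"
    query = f"{parts.query}&{extra}" if parts.query else extra
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, ""))


if __name__ == "__main__":
    print(escaped_fragment_url(
        "https://www.stringsdirect.co.uk/c/541/strings/electric-guitar-strings-sets/#!page=6"
    ))
```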

The golden rule of thumb with any URL structure is to ensure that you tell search engines not to index versions of the page where the content is not substantially different (e.g. sort orders) or is a sub-set of content found via another URL (e.g. filtering by brand, size, price etc.). Meanwhile, you need to ensure that search engines don’t ignore URLs of pages that have different content (usually pagination).